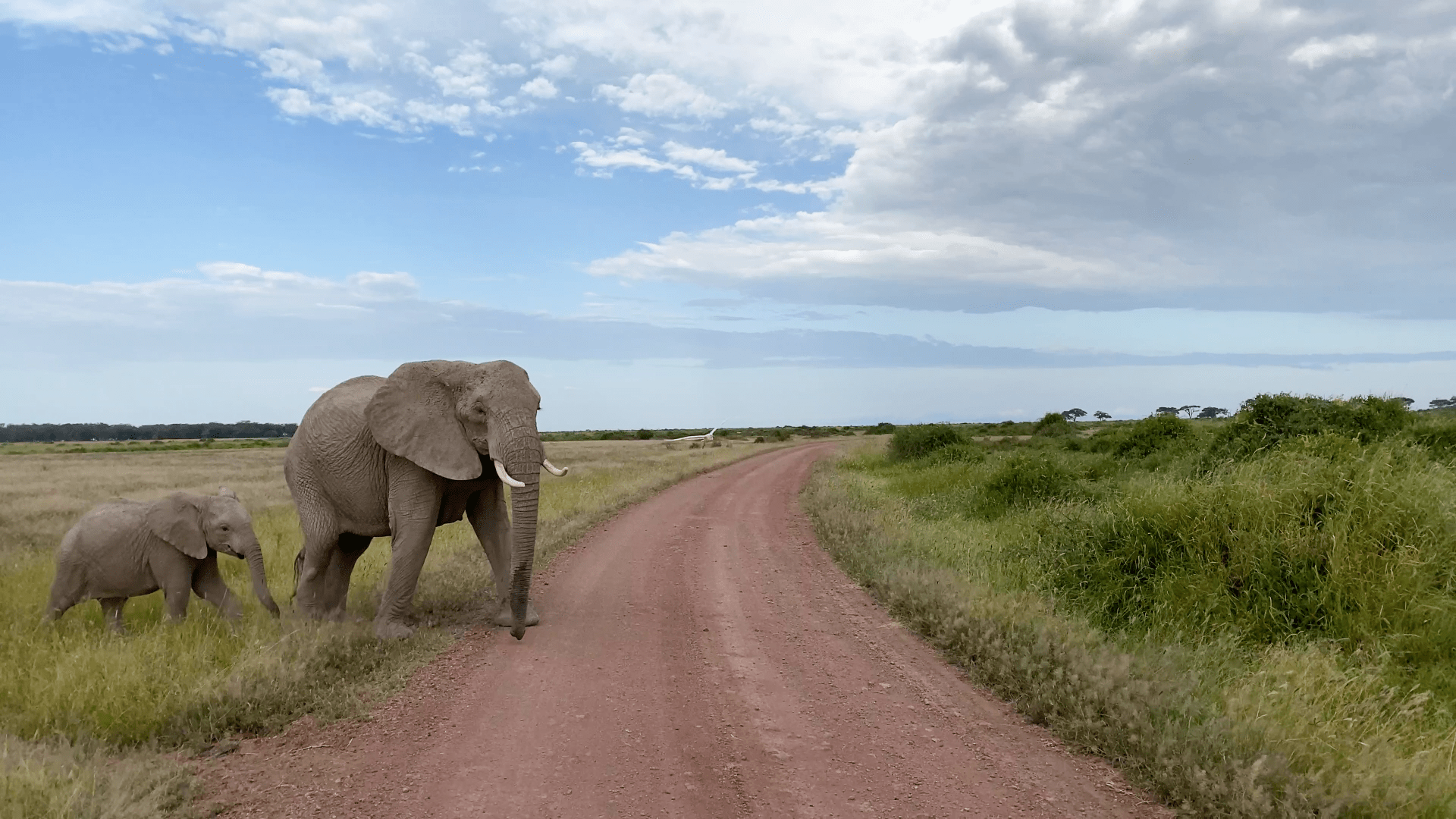

One day, in the early 1980s, Joyce Poole drove her small green jeep out into the open grasslands of Amboseli National Park in Kenya to observe African savanna elephants. She was approached by a male elephant in “musth”, a period of heightened sexual and aggressive activity.

The animal flapped his ears and made a rumble like the sound of water gurgling through a deep pipe. Poole knew this noise should signal a threat but she could barely hear it. “I started thinking, OK, maybe it’s not audible to me, but it is very audible to them.”

Recordings of the elephants confirmed her hunch. When she and her co-researcher Katy Payne viewed them as spectrograms (a visual “heat map” of sound waves that shows volume and pitch), they discovered that a great many elephant vocalisations take place in infrasound, below the range of human hearing.

Poole, 67, has now been studying elephants for five decades. She is convinced the animals are capable of complex communication, using low-frequency sounds to stay in touch from afar. Through her years of study, she has managed to identify the meaning of certain calls.

Listen to the elephant sounds

Trumpet blast

Elephants use short, high-pitched trumpet blasts to ward off predators, but also in play. AI may detect the difference.

Greeting rumble

Individual greeting rumbles are used in close proximity — different from greeting ceremonies, when family calls overlap

Musth rumble

A very low frequency sound, musth rumbles are only produced by adult males during sexual and aggressive periods

Yet there is a great deal about elephant communication that she still doesn’t know. Most difficult to untangle are overlapping calls when, in a birth or mating ritual, a group of elephants will talk over each other. Unstitching this cacophony of rumbles is near-impossible — especially when so much of the sound is emitted below the lower limit of human audibility.

However, a new tool reshaping many aspects of the human world could also transform our understanding of the animal kingdom: generative artificial intelligence.

Researchers hope that the same technology that is powering ChatGPT will allow us to reach into the non-human world, and begin to understand — even speak — animal languages.

Two years ago, an organisation called Earth Species Project contacted Poole, hoping to train its algorithms on her dataset. The non-profit is just one of several organisations founded in recent years with the aim of translating animal languages.

But building the Rosetta Stone of the animal kingdom requires more than just feeding elephant rumbles, fish grunts, orangutan howls and bat squeaks into a machine. If AI is successful in finding meaning in the data, scientists will have to grapple with how to translate those signals into the human realm.

It is still unclear how far our advances in computer processing power can penetrate the secret world of animals. But if the effort even partially succeeds, it could radically alter our perception of the billions of other creatures we share the planet with, while also raising thorny ethical questions about how we use this information.

For researchers like Poole, it will also serve as validation. “Having studied elephants for so long . . . and feeling that they really are autonomous, intelligent animals who have discussions with one another,” she says, “I am so excited that AI may be able to show this.”

In 1974, American philosopher Thomas Nagel asked in a renowned paper, “What is it like to be a bat?”

In attempting to lend philosophical scrutiny to a question that has dogged humans from King Solomon to Dr Dolittle, he concluded that our attempt to comprehend animal experience from the framework of the human mind and body was doomed to fail.

In order to remove the human goggles, he wrote, researchers must put themselves in the umwelt, or worldview, of an animal. When it came to bats, he argued, this was impossible, as humans could never experience the world in a bat’s body.

“But he wrote those arguments before the advent of digital technology,” said Karen Bakker, the late professor and author of The Sounds of Life, in an FT Tech Tonic podcast recorded before her death. “You and I could never echolocate like a bat, trumpet like an elephant, buzz like a bee. But our computers can.”

Already, technological advances have granted us fresh insights. As microphones become more affordable and portable, scientists are uncovering a new world of sound.

Unhatched turtles use sonics to co-ordinate their hatching from within their shells, healthy soil emits a cacophony of noises from worms and bugs, and coral larvae can hear the sound of their home reef from across the ocean.

“Sonics is the equivalent of optics insofar as it decentres humanity within the tree of life,” Bakker explained. Just as the telescope opened up a new world of scientific discovery, microphones and AI may do the same.

‘Frequency’ refers to the number of repetitions on a wave in a given timespan. For soundwaves, shorter wavelengths are perceived as higher-pitched sounds; longer wavelengths as lower-pitched sounds.


Humans can perceive sounds with frequencies between 20Hz and 20,000Hz, though we often lose the ability to hear the very highest pitches as we age.
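The link between frequency, wavelength and the limits of human hearing can be put into a few lines of Python. This is a rough sketch rather than anything taken from the researchers' work: the speed of sound (343 metres per second, roughly right for dry air at 20°C) and the three example frequencies are assumptions chosen for illustration, and the function names are made up.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0  # assumed: dry air at about 20 degrees C

HUMAN_LOW_HZ = 20.0       # lower limit of human hearing given in the text
HUMAN_HIGH_HZ = 20_000.0  # upper limit of human hearing given in the text


def wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_AIR_M_S):
    """Wavelength in metres: wave speed divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_s / frequency_hz


def hearing_band(frequency_hz):
    """Label a frequency relative to the human hearing range."""
    if frequency_hz < HUMAN_LOW_HZ:
        return "infrasonic"
    if frequency_hz > HUMAN_HIGH_HZ:
        return "ultrasonic"
    return "audible"


# Illustrative frequencies only; they are not measurements from the article.
examples = [("low rumble", 15.0), ("concert pitch A", 440.0), ("high squeak", 50_000.0)]
for label, hz in examples:
    print(f"{label}: {hz:g} Hz, {wavelength_m(hz):.4f} m, {hearing_band(hz)}")
```

Under these assumptions, a sound at the bottom of the human range has a wavelength of about 17 metres, while one at the top measures less than two centimetres.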

The natural world teems with activity outside the range of human hearing, from mice or bats communicating in very high, or ultrasonic, frequencies to the very low, infrasonic rumbles produced by elephants, whales or volcanoes.

Spectrograms allow us to visualise activity across a full range of frequencies, including those that we cannot hear.

In this visualisation of an elephant rumble recorded by Joyce Poole, for example, much is going on below the surface of human audible frequencies. This infrasonic noise can be heard by elephants up to 10km away.
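For readers who want to see how a spectrogram is put together, the sketch below builds one from scratch in plain Python. It does not use Poole's recordings: the "rumble" is a synthetic signal (a strong 15Hz tone plus a weaker 120Hz tone), and the sample rate, frame length and function names are all assumptions made for the example. Real analyses would normally rely on a fast Fourier transform from a numerical library rather than the slow, direct calculation shown here.

```python
import cmath
import math

SAMPLE_RATE = 1000  # samples per second (assumed)
FRAME_LEN = 200     # samples per frame, which gives 5 Hz per frequency bin
HOP = 100           # samples between the starts of successive frames


def synth_rumble(seconds=1.0):
    """Synthetic stand-in for a recording: 15 Hz plus a quieter 120 Hz tone."""
    n = int(SAMPLE_RATE * seconds)
    return [
        math.sin(2 * math.pi * 15 * t / SAMPLE_RATE)
        + 0.5 * math.sin(2 * math.pi * 120 * t / SAMPLE_RATE)
        for t in range(n)
    ]


def spectrogram(signal):
    """Return one row of frequency magnitudes per time frame."""
    rows = []
    for start in range(0, len(signal) - FRAME_LEN + 1, HOP):
        frame = signal[start:start + FRAME_LEN]
        # A Hann window fades each frame in and out to limit spectral leakage.
        windowed = [
            x * (0.5 - 0.5 * math.cos(2 * math.pi * i / FRAME_LEN))
            for i, x in enumerate(frame)
        ]
        row = []
        for k in range(FRAME_LEN // 2 + 1):
            # Direct discrete Fourier transform for frequency bin k.
            total = sum(
                w * cmath.exp(-2j * math.pi * k * i / FRAME_LEN)
                for i, w in enumerate(windowed)
            )
            row.append(abs(total))
        rows.append(row)
    return rows


def bin_frequency(k):
    """Centre frequency in Hz of bin k."""
    return k * SAMPLE_RATE / FRAME_LEN


def loudest_frequency(row):
    return bin_frequency(max(range(len(row)), key=row.__getitem__))


def infrasonic_share(row, limit_hz=20.0):
    """Fraction of a frame's energy that lies below limit_hz."""
    energy = [m * m for m in row]
    below = sum(e for k, e in enumerate(energy) if bin_frequency(k) < limit_hz)
    return below / sum(energy)


spec = spectrogram(synth_rumble())
print(len(spec), "frames; loudest frequency in first frame:", loudest_frequency(spec[0]), "Hz")
```

Each row is one vertical slice of the "heat map". In this made-up signal, most of the energy in every slice sits below 20Hz, which is the pattern the text describes for elephant rumbles.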

Recordings of bat noises from a cave, meanwhile, reveal high-frequency ultrasonic activity, similarly imperceptible to the naked ear.

As humans tune into the nonhuman world, some of our practices are changing. Off the coast of Canada, microphones detect the live locations of endangered North Atlantic right whales, so that ships can be diverted or slowed to avoid deadly collisions.

Last year, biologist Lilach Hadany discovered that plants in distress make noises — the click of a dry tomato plant sounds different from that of a dry wheat plant. Hadany says commercial agriculture is interested in her findings: if farmers could hear which plants were diseased, then pesticides would only be needed for a small portion of the crop.

As the number of AI tools increases, providing researchers with new ways to tune into the animal kingdom, goals that once seemed fantastical may now be within reach.

Aza Raskin, a Silicon Valley entrepreneur, got the idea for Earth Species Project 10 years ago while listening to the radio. A scientist was describing her process of recording and transcribing what she believed to be one of the richest languages of primates — the sounds of gelada monkeys.

“Could we use machine learning and microphone arrays to understand a language we’ve never understood before?” Raskin remembers pondering.

In 2013, machine learning was not advanced enough to translate a language where no prior examples existed. But that started to change four years later, when researchers at Google published “Attention Is All You Need” — the paper that paved the way for large language models (LLMs) and generative AI. Suddenly, Raskin’s idea of translating animal languages “without the need for a Rosetta Stone” seemed possible.

The core insight of LLMs, explains Raskin, is that they treat everything as a language whose semantic relationships can be transcribed as geometric relationships. It is through this framework that Raskin conceptualises how generative AI could “translate” animals for humans.

Raskin suggests thinking of English as a ‘galaxy where every star is a word. Words that have similar meanings are close to each other in the galaxy’.

This representation is known as an embedding space.

The word ‘king’ is the same distance and direction from ‘man’ as ‘queen’ is from ‘woman’. This vector might represent regality.
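The king and queen relationship can be tried out on a toy embedding space. The two-dimensional coordinates below are invented by hand so that the arithmetic works cleanly; real models learn their coordinates from data and use hundreds of dimensions, so treat this as a sketch of the idea only.

```python
import math

# Hypothetical 2-D coordinates chosen by hand for illustration.
EMBEDDINGS = {
    "man": (1.0, 1.0),
    "woman": (1.0, 3.0),
    "king": (4.0, 1.0),
    "queen": (4.0, 3.0),
    "apple": (-3.0, 0.5),
    "river": (-2.0, -2.5),
}


def offset(start, end):
    """Vector pointing from one point to another."""
    return (end[0] - start[0], end[1] - start[1])


def analogy(a, b, c):
    """Return the word d such that a is to b as c is to d."""
    step = offset(EMBEDDINGS[a], EMBEDDINGS[b])
    target = (EMBEDDINGS[c][0] + step[0], EMBEDDINGS[c][1] + step[1])
    candidates = (w for w in EMBEDDINGS if w not in (a, b, c))
    return min(candidates, key=lambda w: math.dist(EMBEDDINGS[w], target))


print(analogy("man", "king", "woman"))
```

In this hand-built space the step from "man" to "king" is the same vector as the step from "woman" to "queen", so applying it to "woman" lands on "queen".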

Other languages — take Spanish and Russian — can be mapped geometrically in the same way, even if things don’t line up identically.

To translate into another language, the two embedding spaces are rotated until they overlap, and the points within them coincide and can be translated between.

Translation comes, therefore, not from a pre-existing understanding of the meaning of the words, but from the relationships between the words as observed through usage.

If the data sample is large enough, no prior understanding of a word is needed to translate its meaning.
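The idea of rotating one galaxy until it overlaps another can also be sketched in code. Everything here is an assumption made for illustration: the coordinates are invented, the "Spanish" space is simply the English one turned by 40 degrees, and the search tries every whole-degree rotation in turn. Published alignment methods work in far more dimensions and are considerably more sophisticated, so this shows the intuition rather than any group's actual technique.

```python
import math


def rotate(point, degrees):
    """Rotate a 2-D point about the origin."""
    theta = math.radians(degrees)
    x, y = point
    return (
        x * math.cos(theta) - y * math.sin(theta),
        x * math.sin(theta) + y * math.cos(theta),
    )


# Hypothetical English embedding space.
ENGLISH = {
    "man": (1.0, 0.0),
    "woman": (1.0, 2.0),
    "king": (4.0, 0.5),
    "queen": (4.0, 2.5),
    "dog": (-2.0, 1.0),
}

# Hypothetical second language: the same shape, turned by 40 degrees.
# The search below never looks at which word pairs with which.
SPANISH = {
    "perro": rotate(ENGLISH["dog"], 40),
    "reina": rotate(ENGLISH["queen"], 40),
    "hombre": rotate(ENGLISH["man"], 40),
    "rey": rotate(ENGLISH["king"], 40),
    "mujer": rotate(ENGLISH["woman"], 40),
}


def mismatch(degrees):
    """Total distance from each rotated English point to its nearest Spanish point."""
    targets = list(SPANISH.values())
    return sum(
        min(math.dist(rotate(p, degrees), t) for t in targets)
        for p in ENGLISH.values()
    )


def best_rotation():
    """Try every whole-degree rotation and keep the one with the best overlap."""
    return min(range(360), key=mismatch)


def translate(word, degrees):
    """Rotate an English word into the Spanish space and pick the nearest word."""
    turned = rotate(ENGLISH[word], degrees)
    return min(SPANISH, key=lambda w: math.dist(SPANISH[w], turned))


angle = best_rotation()
print(angle, {word: translate(word, angle) for word in ENGLISH})
```

No dictionary is supplied at any point. The translations fall out of the shape of the two clouds of points, which is the sense in which no Rosetta Stone is needed.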

Nonetheless, even if armed with a comprehensive embedding space or galaxy for elephant language, how would we begin to translate when our physiologies and worldview are so different? Could there be pockets of experience that are shared between animals and humans, like love, grief and joy?

Raskin thinks so — and if AI can identify the areas where these experiences overlap then a piece of the code could be cracked.

Self-awareness is a concept humans share with elephants and dolphins, for example. Getting high is also not just a human pastime: lemurs take bites of millipedes and “enter a trance-like state”, Raskin says. “Dolphins intentionally get puffer fish to inflate and get high off of their venom and pass it around — literal puff, puff, pass.”

Generative AI is not merely a potential translation tool. It uses existing data to produce new information across a variety of media, such as text, images and audio. That’s why ChatGPT can answer questions, and algorithms can generate images or write music.

Already, Earth Species Project is experimenting with synthetic animal sounds. In the same way a human voice can be deepfaked, new chirps, rumbles or howls can be artificially generated by machine learning.

Like this chiffchaff call. The first clip is a recording of the birds — the second is a call generated by AI.

But it is the capacity for moving between modalities that gives Raskin hope that transformers can truly break into the umwelt of other creatures. He believes that, with the right data, they could show us the ways in which non-verbal and verbal communication are intertwined.

Yet if generative AI can interpret all manner of animal communication modes, what that looks like for a human-intelligible translation is still unclear. “Maybe the translation ends up not looking like a Dr Dolittle or Google translator where you get specific words, but maybe it ends up as flashes of colour and some sound, and you get a sort of a felt sense of what maybe they mean,” Raskin says.

The obstacles are formidable. First, many recordings of animal social interactions contain overlapping voices, which, as Poole found in elephant families, can be hard to separate.

This is known as the “cocktail party problem”, and Earth Species Project has already had some success using AI to separate the sounds of overlapping monkeys barking.

Second, the volume of data needed to train generative algorithms is vast: GPT-3 was trained on billions of words — data that could also be understood and verified by the scientists running the algorithms.

Earth Species Project relies on datasets from researchers like Poole. Yet even after decades recording and filming elephants, she has only captured a fraction of the data needed to comprehensively train generative AI.

This limitation is exactly what the Cetacean Translation Initiative, also known as Project Ceti, is trying to tackle. Off the coast of Dominica in the Caribbean, the alliance of animal scientists and machine learning experts is installing a continuous recording set-up to capture the social interactions of sperm whales.

Sperm whales, which have the largest brains of all animals, gather at the surface of the water in family groups and talk using Morse code-like sequences of clicks known as codas.

It is these codas that David Gruber, the founder of Project Ceti, and Shane Gero, lead biologist with the project, want to understand. For two decades Gero has been recording sperm whale conversations by throwing a hydrophone — an underwater microphone — over the side of his boat.

This limited recording process, he worries, gives him a narrow view of sperm whale life. He calls it the dentist office problem: “If you only study English-speaking society and you’re only recording in a dentist’s office, you're going to think the words root canal and cavity are critically important to English-speaking culture, right?”

Ceti’s AI team tested algorithms on Gero’s recordings to see if they were capable of recognising where one coda stopped and another started. If they fed the machine 20 per cent of what they had, they found that it could predict outcomes for the remaining 80 per cent, in the same way that predictive text can anticipate the next words in a sentence. Initial results were 95 per cent accurate. What could they achieve, Gruber wondered, if they could quadruple the volume of data?
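The testing approach described here, fitting on a fifth of the data and checking against the rest, can be shown with a deliberately simple stand-in. The sketch below is not Ceti's model: it uses synthetic gaps between clicks, assumes a long gap marks the end of a coda, and learns a single cut-off value instead of training a neural network. All names and numbers are hypothetical.

```python
def make_gaps(n=200):
    """Synthetic (gap_seconds, ends_coda) pairs; every fifth gap ends a coda."""
    gaps = []
    for i in range(n):
        if i % 5 == 4:
            gaps.append((1.0 + (i % 7) * 0.3, True))   # pause between codas
        else:
            gaps.append((0.1 + (i % 4) * 0.1, False))  # gap inside a coda
    return gaps


def fit_threshold(train):
    """Place the cut-off midway between the two kinds of gap seen in training."""
    longest_inside = max(gap for gap, ends in train if not ends)
    shortest_between = min(gap for gap, ends in train if ends)
    return (longest_inside + shortest_between) / 2


def accuracy(threshold, held_out):
    """Share of held-out gaps whose label the cut-off predicts correctly."""
    correct = sum((gap > threshold) == ends for gap, ends in held_out)
    return correct / len(held_out)


gaps = make_gaps()
split = len(gaps) // 5                     # 20 per cent is used for fitting
train, held_out = gaps[:split], gaps[split:]
threshold = fit_threshold(train)
print(f"cut-off {threshold:.2f}s, held-out accuracy {accuracy(threshold, held_out):.0%}")
```

Because the synthetic gaps are cleanly separated, the cut-off scores perfectly on the held-out 80 per cent. Real recordings are far messier, which is why the reported accuracy on Gero's data is the more telling figure.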

In Dominica’s seas, Ceti is installing microphones on buoys. Robotic fish and aerial drones will follow the sperm whales, and tags fitted to their backs will record their movement, heartbeat, vocalisations and depth. With this new recording set-up, Ceti predicts it could accrue 400,000 times more data than Gero already has, every year.

Understanding what sperm whales say shows us what’s important to them, Gero says. Already without the help of AI, researchers have learnt that sperm whales use dialects.

A group of sperm whales found off the coast of Dominica self-identifies using the 1+1+3 coda. That’s two evenly spaced clicks and then three clicks in quick succession. Another clan, usually found around Martinique and St Lucia, self-labels by emitting five evenly spaced clicks, known as the 5R.
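The difference between the two identity codas comes down to rhythm, which makes it easy to sketch in code. The example below represents a coda as a list of click times in seconds and labels it by the pattern of gaps between clicks. The timings, the two ratio settings and the function names are assumptions made for illustration, not values used by the researchers.

```python
def intervals(click_times):
    """Gaps in seconds between successive clicks."""
    return [later - earlier for earlier, later in zip(click_times, click_times[1:])]


def classify_coda(click_times, even_ratio=1.3, long_ratio=1.5):
    """Label a five-click coda by its rhythm (thresholds are assumed)."""
    gaps = intervals(click_times)
    if len(gaps) != 4 or min(gaps) <= 0:
        return "unknown"
    # 5R: all four gaps are roughly the same length.
    if max(gaps) / min(gaps) <= even_ratio:
        return "5R"
    # 1+1+3: two longer gaps, then two short gaps in quick succession.
    if min(gaps[:2]) >= long_ratio * max(gaps[2:]):
        return "1+1+3"
    return "unknown"


# Hypothetical click times, in seconds from the first click.
print(classify_coda([0.0, 0.3, 0.6, 0.7, 0.8]))
print(classify_coda([0.0, 0.2, 0.4, 0.6, 0.8]))
```

Comparing ratios of gaps, not absolute durations, means the same label applies whether a whale clicks the pattern quickly or slowly.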

For Gero, this shows that animals’ social lives shape what they communicate. “There’s this importance of who you are, where you come from and with whom you belong.”

Like humans, sperm whale calves are not born with language — it takes them two years of babbling to correctly emit an identity coda. The AI model that Project Ceti is developing will go on a similar learning curve, babbling until it produces distinguishable sounds.

Gašper Beguš, the linguistics lead at Project Ceti, says its algorithms won’t provide immediate translations of codas, but will instead show researchers which calls are likely to be meaningful to the whales, narrowing their research focus.

Could these kinds of advances pave the way for a Google Translate that renders whale sounds into human words? Zoology professor Yossi Yovel is sceptical.

Yovel runs a department at the University of Tel Aviv called the Bat Lab. Eight years ago, before the advent of large language models, he used machine learning to show that Egyptian fruit bats communicate through aggressive calls; although not audible to humans, a call made about mating sounds different from one about sleep, eating or personal space.

Now he plans to run the same data through generative AI to see if it throws up new results, but he is not optimistic that the technology will revolutionise the field.

For Yovel, the issues boil down to two things: human perception is limited, and animal communication is limited. “We want to ask animals, how do you feel today? Or what did you do yesterday? Now the thing is, if animals aren’t talking about these things, there’s no way [for us] to talk to them about it.” Communication, Yovel argues, may not be the gateway to an animal’s interior life.

Then there’s the question of umwelt. Many animals use scent to convey information, some use electrical fields, others stridulate (make noise by rubbing body parts together like a cricket), and some create seismic waves.

While technology may enable humans to capture these more unexpected communication modes, for Yovel the field is still dependent on human interpretation. A lot of the training data for generative AI models is based on datasets labelled by human researchers.

“I’ll write that the bats are fighting over food, but maybe that’s not true,” Yovel explains, “maybe they’re fighting over something that I have no clue about because I am a human. Maybe they have just seen the magnetic field of earth, which some animals can.” Removing the bias of human interpretation, Yovel says, is impossible.

Others argue that if enough relevant data is gathered, AI would eliminate that bias. But wild animals don’t tend to co-operate with human data-gathering endeavours, and microphone technology has its limits. “People think we’ll measure everything and put it into AI machines. We are not even close to that,” says Yovel.

Poole says she has spoken to Ceti’s David Gruber about how to collect data on elephants in a non-invasive way: “Sperm whales have robotic fish, could we have robotic egrets or something?”

Yet even if enough of the right data could be run through generative AI, what would humans make of the computerised rumbles and squeaks, or scents and seismic waves?

What is meaningful to an algorithm may still be unintelligible to a human. As one researcher put it, translation is contingent on testing what AI has learnt.

Traditionally, this is done through a playback experiment — a recording of a vocalisation is played to a species to see how they respond. Yet, getting a clear answer on what something means is not always possible.

Yovel describes playing back recordings of different aggressive calls to the bats he studies, and each call being met with a twitch of the ears. “There’s no clear difference in the response and it’s very difficult to come up with an experiment that will measure these differences,” he says.

With generative AI, these playback experiments could now be done using synthesised animal voices, a prospect that thrusts this research into a delicate ethical balance.

In the human world, debates around ethics, boundary setting and AI are fraught, especially when it comes to deepfaked human voices. There is already evidence that conducting playbacks of pre-recorded animal sounds can impact behavioural patterns: Earth Species founder Raskin shares an anecdote about accidentally playing a whale’s name back to it, after which the whale became distressed.

The unknowns with using synthetic voices are vast. Take humpback whales: the songs sung by humpbacks in one part of the world get picked up and spread until a song that emanates from the coast of Australia can be heard on the other side of the world a couple of seasons later, says Raskin.

“Humans have been passing down culture vocally for maybe 300,000 years. Whales and dolphins have been passing down culture vocally for 34mn,” he explains. If researchers start emitting AI-synthesised whalesong into the mix “we may create like a viral meme that infects a 34mn-year-old culture, which we probably shouldn't do.”

“The last thing any of us want to do is be in a scenario where we look back and say, like Einstein did, ‘If I had known better I would have never helped with the bomb,’” says Gero.

There are other ethical questions. Who should be allowed to speak on behalf of humans? Who can be trusted to act in the animals’ best interests? What kinds of protections should be placed on the data to ensure it isn’t used against those interests — for hunting or poaching?

This is a field in its infancy, and its ethical boundaries are not yet fixed. Actions taken now will shape this discipline for decades to come. For Gruber, Project Ceti is more about listening and building empathy. “The last thing I think humans need to do is to start talking at animals.”

In any case, many of the concerns about talking to animals are still hypothetical. Project Ceti is only just installing its autonomous recording equipment. Earth Species Project is at the start of its work with algorithms. Poole has a great deal more data to label.

“We may be able to do simple things, like issue better alarm calls or better interpret the sounds of other species,” said Bakker. “But I don’t think we’re going to have a zoological version of Google Translate available in the next decade.”

Yet even without technology, the veil between the human and nonhuman worlds is already permeable, especially for those paying attention. Elephants in Amboseli greet Poole when they see her. Gero suspects the sperm whales in Dominica recognise his team’s research vessel.

One day, Gero was out on the boat watching sperm whales dive. Each time one submerged, he would approach that spot to collect samples from the water’s surface. One whale — known to the researchers as Can Opener — appeared to realise what was happening, he recalls.

The whale dived, but immediately resurfaced, rolling its eye out of the water to look at the biologists who, giddy with excitement, ran up and down the boat looking back at it. “To me, that says a lot about who a whale is and what they’re thinking about without being able to show it empirically,” he says.


Visual storytelling team: Irene de la Torre Arenas, Sam Learner, Sam Joiner.

Notes and sources: Audio clips provided by Earth Species Project, Project Ceti, Elephant Voices, The Bat Lab for Neuro-Ecology and Professor Lilach Hadany, both part of the University of Tel Aviv, Claire Spottiswoode, Ben Williams and Voices in the Sea. Datasets for automatic acoustic identification of individual chiffchaffs provided by Zenodo.

Visualisation of embedding spaces inspired by Earth Species Project’s “How to translate a language” presentation. The sounds of a tomato plant and a wheat plant have been sped up to bring them into the range of human hearing.

Additional reporting by John Thornhill.